For people, being touched can initiate many different

reactions from comfort to discomfort, from intimacy to aggression. But how

might people react if they were touched by a robot? Would they recoil, or would

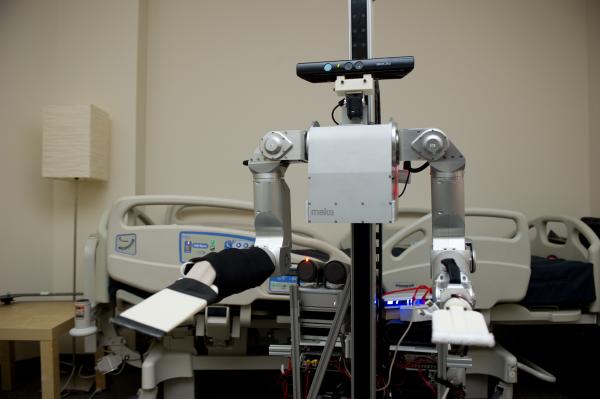

they take it in stride? In an initial study, researchers at the Georgia

Institute of Technology found people generally had a positive response toward

being touched by a robotic nurse, but that their perception of the robot’s

intent made a significant difference. The research is being presented today at

the Human-Robot Interaction conference in Lausanne, Switzerland.

“What we found was that how people perceived the intent of the robot was really important to how they responded. So, even though the robot touched people in the same way, if people thought the robot was doing that to clean them, versus doing that to comfort them, it made a significant difference in the way they responded and whether they found that contact favorable or not,” said Charlie Kemp, assistant professor in the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University.

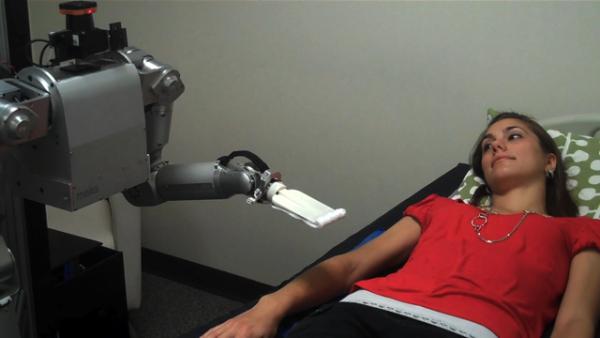

In the study, researchers looked at how people responded when a robotic nurse, known as Cody, touched and wiped a person’s forearm. Although Cody touched the subjects in exactly the same way each time, they reacted more positively when they believed Cody intended to clean their arm than when they believed Cody intended to comfort them.

These results echo similar studies done with nurses.

“There have been studies of nurses and they’ve looked at how people respond to physical contact with nurses,” said Kemp, who is also an adjunct professor in Georgia Tech’s College of Computing. “And they found that, in general, if people interpreted the touch of the nurse as being instrumental, as being important to the task, then people were OK with it. But if people interpreted the touch as being to provide comfort … people were not so comfortable with that.”

In addition, Kemp and his research team tested whether people responded more favorably when the robot verbally indicated that it was about to touch them than when it touched them without saying anything beforehand.

“The results suggest that people preferred when the robot did not actually give them the warning,” said Tiffany Chen, a doctoral student at Georgia Tech. “We think this might be because they were startled when the robot started speaking, but the results are generally inconclusive.”

Since many useful tasks require that a robot touch a person, the team believes that future research should investigate ways to make robot touch more acceptable to people, especially in healthcare. Many important healthcare tasks, such as wound dressing and assisting with hygiene, would require a robotic nurse to touch the patient’s body.

“If we want robots to be successful in healthcare, we’re going to need to think about how do we make those robots communicate their intention and how do people interpret the intentions of the robot,” added Kemp. “And I think people haven’t been as focused on that until now. Primarily people have been focused on how can we make the robot safe, how can we make it do its task effectively. But that’s not going to be enough if we actually want these robots out there helping people in the real world.”

In addition to Kemp and Chen, the research group consists of Andrea Thomaz, assistant professor in Georgia Tech’s College of Computing, and postdoctoral fellow Chih-Hung Aaron King.

Media Contact

Georgia Tech Media Relations

Laura Diamond

laura.diamond@comm.gatech.edu

404-894-6016

Jason Maderer

maderer@gatech.edu

404-660-2926
